Introduction

We are witnessing a significant proliferation of AI assistants and agents designed to interact with humans across all domains. This rapid advancement has brought a critical challenge: the lack of rigorous, systematic approaches to evaluating these AI systems.

Current industry practices for evaluating Large Language Models (LLMs) systems often rely on domain-expert evaluations. While human evaluation may be reliable, it is prohibitively expensive, time-consuming, and challenging to scale. Moreover, if not carefully executed, such assessments can be prone to subjectivity, potentially compromising the evaluations.

A recent trend has emerged to address these limitations: LLM-as-a-judge, or Language Model (LM) evaluators, to simulate human evaluation and automatically assess the quality of responses from LLM-powered systems.

Frontier models, such as GPT-4 and Claude 3.5 Sonnet, have become the de facto standards for LLM-based evaluations. However, using proprietary models as evaluators of AI applications raises significant concerns:

| Closed-source | The closed-source nature of these models limits transparency and customization, hindering developers' ability to understand or refine the models. |

| Uncontrolled versioning | Uncontrolled versioning introduces consistency challenges, potentially causing unexpected changes in LM evaluator behavior and performance metrics over time. |

| Costs | The costs of API usage can be prohibitive, especially for startups or companies operating at scale. This could impact the economic viability of LM-based evaluators for LLM-powered applications. |

| Privacy and data security | These models require sending potentially sensitive application data to external servers, risking the exposure of confidential information in organizations with strict data governance policies or those operating in regulated industries. |

The release of Llama 3.1 405B Instruct [1] opened the door for a frontier open-source model that could be used as a powerful evaluator. However, the model's size makes running evaluations impractical and expensive.

While there have been significant strides in the open-source community to train robust LM evaluators, notably the Prometheus models and other alternatives [2-5], there remains room for innovation and research, especially in LLM system evaluations. This is particularly true with flexible, small LM evaluators targeting LLM systems instead of general model evaluations.

Over the past months, we have been building the first small open LM evaluator trained on evaluation data for LLM systems.

Flow Judge: an open small language model for LLM system evaluations

Today, we introduce Flow Judge, an open, smaller-scale LM evaluator that achieves comparable performance to larger models such as GPT-4o and Claude 3.5 Sonnet, and popular open models like Llama 3.1 8B Instruct [1] on a variety of evaluation benchmarks.

Our model offers several unique advantages:

- Small but mighty: Flow Judge is a 3.8B model – much smaller than existing models used for LM-based evaluations – making it more accessible and easier to deploy in various environments. Despite its smaller size, Flow Judge achieves a high correlation with standard evaluators like GPT-4o and Claude 3.5 Sonnet, rivaling the performance of larger models in our held-out dataset and out-of-domain benchmarks such as RAGTruth [6] and HaluEval [7].

- Customizability: Flow Judge can follow custom evaluation criteria established by domain experts, enabling tailored assessments across various fields.

- Supports various scoring scales: Flow Judge can grade responses using rubrics in three different scoring scales: Pass / Fail or error detection, 3-Likert, and 5-Likert.

- Qualitative feedback: Flow Judge detects errors and grades outputs and provides qualitative feedback that explains its reasoning for assigning a particular score from the rubric while highlighting problematic parts of the responses.

- Structured evaluation outputs: Flow Judge was trained to produce structured evaluations with

<feedback>and<score>tags. - Open and accessible model: Flow Judge is released under the Apache 2.0 license, so it is free for any developer or company. Given its small size, it is perfect for anyone who wants to run evaluations cheaply and quickly with their own rubrics.

Flow Judge can contribute to the community by improving LM-based evaluations and establishing evaluation strategies that can systematically produce better LLM systems.

This technical report delves into Flow Judge's data construction process, architecture, training, and performance metrics.

Dataset construction

Our dataset construction process draws inspiration from [2,3,8-11], specifically focusing on aspects crucial for LLM system evaluations in generative AI products rather than model evaluations.

Previous work [2,3] has demonstrated that evaluation rubrics can be used to synthetically generate evaluations for fine-tuning LM evaluators. However, the datasets used in this work centered around model evaluations, containing single instructions and responses from a language model.

We devoted significant effort to synthetically generating training instances with several inputs, not only instructions. These included, for example, user queries and retrieved contextual information. We also generated custom rubrics that AI practitioners could utilize for AI products in different industry verticals, such as legal, manufacturing, and more.

To minimize human intervention and reduce ambiguity in the creation of the evaluations, we employed a dual-evaluation strategy with consensus to create the evaluation data used for training and assessment of Flow Judge.

In the subsequent sections, we briefly explore the curation of seed rubrics, followed by the synthetic generation of domain-adapted rubrics and training data. Finally, we present our consensus method.

Manual data curation of seeds

Our data creation method relies on curating seed metrics and rubrics, which serve as the basis for creating domain-adapted evaluation criteria and rubrics. At the same time, these will serve as seeds for generating input data for different domains and tasks.

After conducting extensive research on the most commonly used evaluation metrics in LLM systems, we categorized metrics relevant to LLM systems as follows:

| Response Quality | Assessing the overall quality of the AI's responses. It includes metrics that evaluate the correctness, relevance, and coherence of the generated content. |

| Retrieval Quality | Particularly relevant for retrieval-augmented generation systems. They assess how well the system retrieves and utilizes relevant information to answer queries. |

| Bias and Toxicity | Addresses ethical concerns in AI outputs, checking for harmful content or unfair biases in the generated responses. |

| Style and Guideline Adherence | Evaluates how well the AI system can adapt its output to match specific tones, styles, or guidelines provided by the user or system requirements. |

| Language | Focuses on the linguistic aspects of the AI's output, including grammar and readability. |

| Miscellaneous | Includes various specialized metrics that don't fit neatly into the other categories. They assess abilities like logical reasoning, strategic planning, and following complex instructions. |

For a complete list of seed metrics and definitions, see Appendix A.

We set a consistent format for the seeds, typically as one-line statements that measure specific aspects of performance. These descriptions were designed to be domain-agnostic, concise, and clear, avoiding aggregated metrics that combine multiple concepts.

A small team of human annotators was provided with formatting instructions that aimed for clarity and consistency across metrics, emphasizing simplicity and avoiding ambiguity.

Three scoring rubrics, one for each supported scoring scale, were curated for each metric. To enhance the quality and efficiency of this process, strong LLMs were employed to augment human capabilities in curating the seed rubrics. However, it's important to note that all seed rubrics underwent a careful human review to ensure the high quality of the curation process.

The curation process yielded 31 distinct metrics with three scoring rubrics per metric. A total of 93 unique data points for subsequent augmentation with domain metadata.

Seen and unseen rubrics

To prevent data leakage and ensure robust evaluation of our held-out dataset, we split our dataset into train, validation, and test sets. By creating the splits at this stage in the data creation pipeline, we guaranteed that the validation and test sets contained entirely unseen metrics and rubrics that the model would not encounter during training.

| Split | Percentage (%) |

|---|---|

| train | 75 |

| validation | 10 |

| test | 15 |

Domain-adapted metrics

Building upon the work in [9,11], we used a systematic approach to generate high-quality, domain-adapted evaluation criteria and rubrics. Our approach utilizes predefined domains as metadata to transform generic seed rubrics into domain-tailored versions.

We choose the domains based on the potential of generative AI systems being used there. We aimed to tailor the generic seed metric to the target domain, creating a more diverse set. We selected 14 domains: Legal, Healthcare, Finance, Education, Customer service, Marketing, Human resources, E-commerce, Travel and Tourism, Technical support, Personal assistant, Biomedical, Manufacturing, and Logistics.

We employed strong language models to adapt the seed metrics and rubrics to specific domains while maintaining the core evaluation criteria involved in the evaluation.

To ensure diversity in the augmented metrics, we randomly sampled the seed metrics and routed them to GPT-4o and Claude 3.5 Sonnet with temperatures ranging from 0.7 to 0.9.

The augmentation process was applied to each 93 metrics across the 14 domains, resulting in a dataset containing 1,302 domain-adapted metrics and rubrics.

Increasing the complexity and diversity

During our systematic quality assurance review, we identified that the synthetically generated domain-adapted criteria and rubrics were usually of low to moderate complexity compared to the evaluation needs in the real world. We made this assessment based on our extensive experience building LLM systems.

Previous works [8] demonstrate that varying complexity levels in the training dataset can yield better results. We decided to enhance the complexity and language diversity of our evaluation metrics. We used LLMs to rewrite evaluation criteria and rubrics to include more complex evaluation setups. After this, we combined these newly generated criteria and rubrics with the original dataset.

# GOAL

Your job is to evolve the given evaluation metric into a more detailed and complex version to improve the quality and diversity of a dataset of metrics that will be used to test an AI system.

# ORIGINAL METRIC

Here is the original metric that needs to be evolved, in JSON format:

<custom_evaluation_metric>

```json

{EVALUATION_METRIC}

```

</custom_evaluation_metric>

# INSTRUCTIONS FOR THE EVOLUTION

To evolve the metric, please strictly follow the guidelines below.

## You are allowed to:

- Modify the name of the metric

- Increase the complexity of the metric description

- Increase the complexity of the variable descriptions (but do NOT create more variables or drastically change their meanings)

- Increase the complexity of the evaluation criteria and score descriptions in the rubric (but do NOT change the score values or scoring scale)

- Increase the length of descriptions by 20-30 words to increase the complexity

## You are NOT allowed to:

- Include in the variable descriptions the actual values the variables should hold, the type of data, or the format.

- Add new variables or drastically change the meaning of existing variables

- Change the score values or scale defined in the rubric

# OUTPUT FORMAT

Please output the new evolved metric in JSON format inside <new_evaluation_metric> tags. Do not include any other surrounding text or explanation outside these tags.

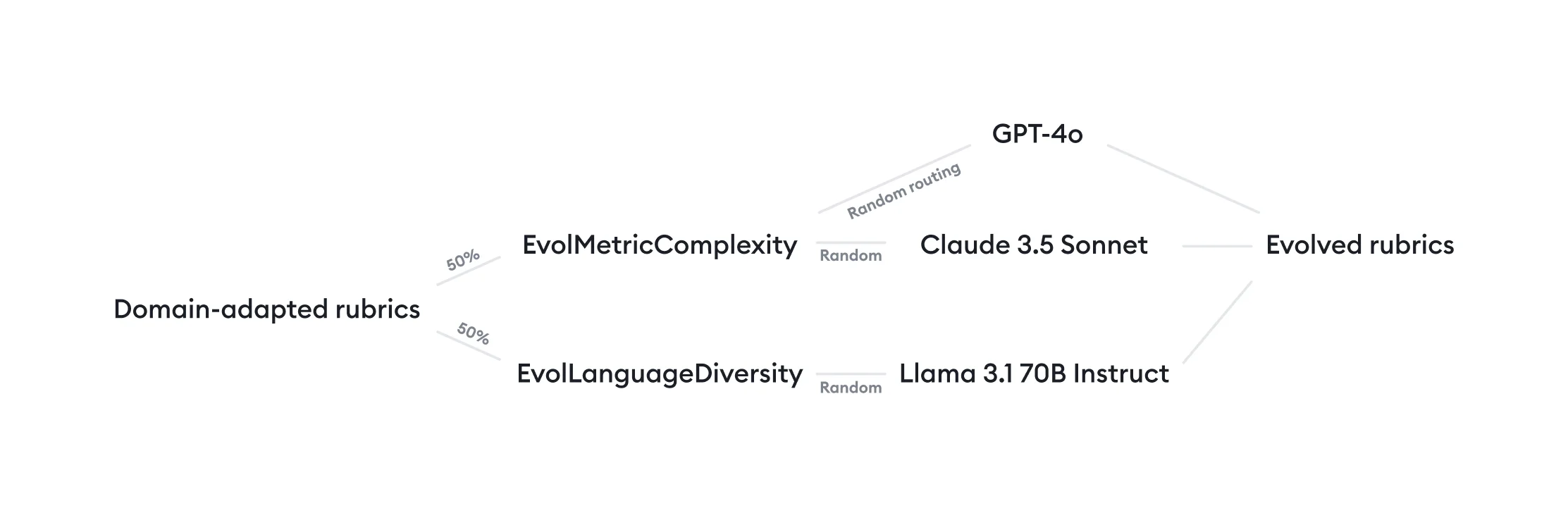

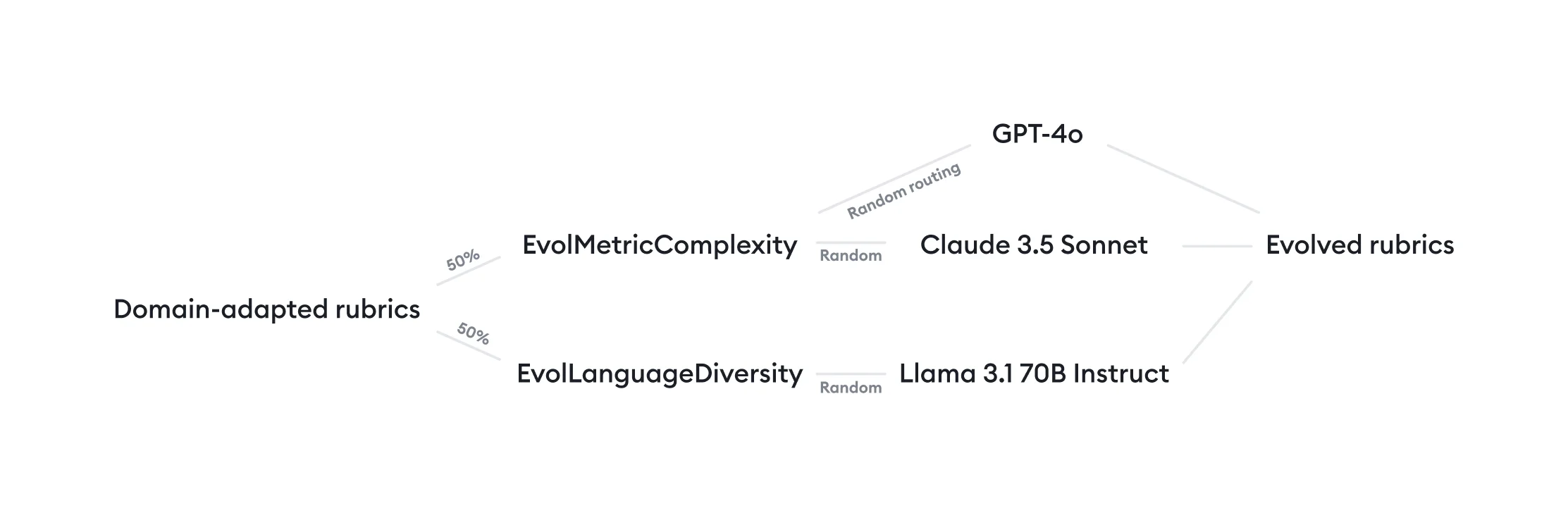

We divided our metrics dataset, allocating 50% of the metrics to complexity evolution and the remaining 50% to language diversity evolution.

For the complexity evolution, we leveraged the strong reasoning capabilities of GPT-4o and Claude 3.5 Sonnet, randomly routing samples to these models with consistent temperature settings to ensure controlled variability. For the evolution of language diversity, we utilized Llama 3.1 70B instruct [1] as a paraphraser since the task requires less reasoning.

This data creation step resulted in an additional 1,302 metrics and rubrics. These were then mixed with the original 1,302 domain-specific metrics, yielding a dataset of 2,604 metrics and rubrics.

These are the splits of the final metrics dataset:

| Name | Count |

|---|---|

| train | 1992 |

| validation | 260 |

| test | 431 |

Synthetic generation of instances from rubrics

We then implemented the next step in the data creation process, which used all the metrics as seeds to generate data across the 14 domains and for different tasks. Each instance was derived from a specific metric, incorporating the evaluation criteria, rubric, metric description, and the input-output specifications of the generative task to be evaluated.

One of the primary challenges in creating a synthetic dataset with evaluations is the need to prompt language models to produce outputs of varying quality, including those that would receive lower scores according to the rubric. To address this, we adopted an approach similar to that described in [2] for creating the Feedback Collection dataset:

- We incorporated a target score in the generation prompt.

- For each score level defined in the rubric, we generated a corresponding instance, including inputs and output.

Note that target scores were not used as final evaluation scores, and actual evaluation data is generated at a subsequent step via the consensus method.

The result was a substantial dataset of 7531 instances spanning different metrics, domains, and scoring scales.

| Name | Count |

|---|---|

| train | 5120 |

| validation | 1045 |

| test | 1276 |

Addressing verbosity biases

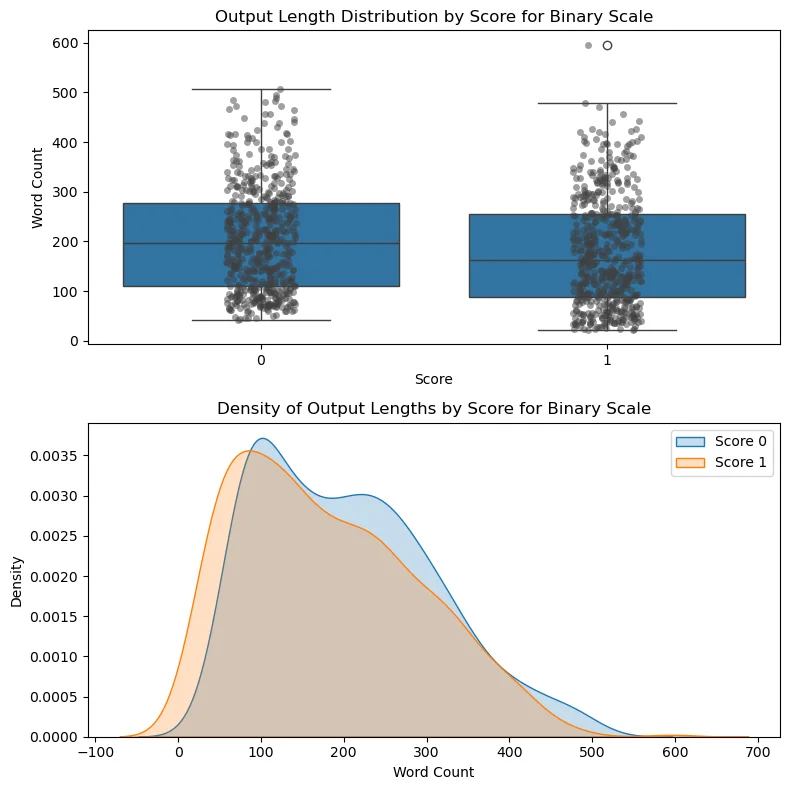

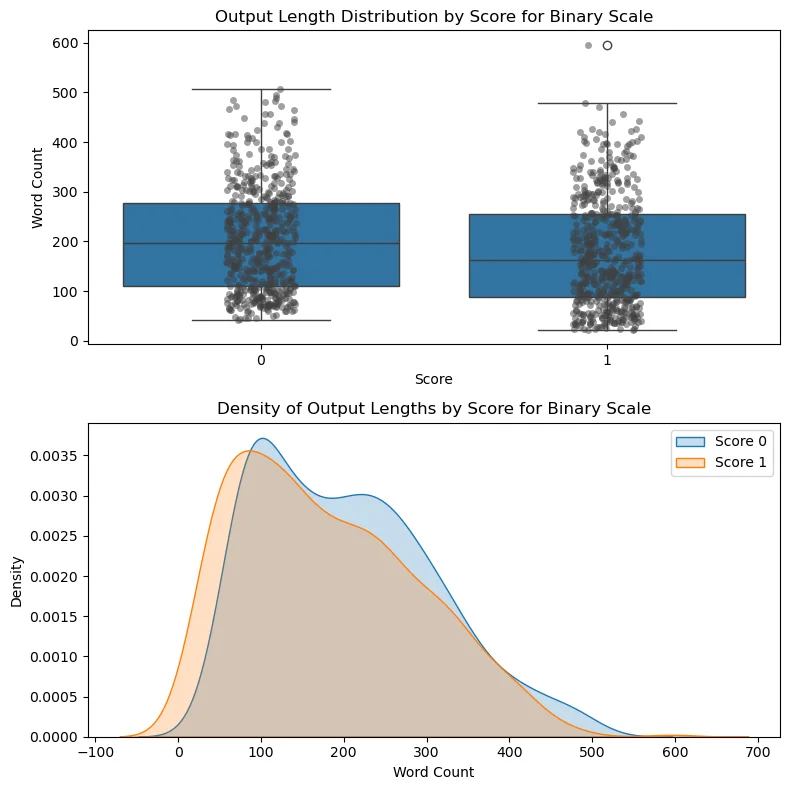

Previous research [12, 13] has demonstrated that LLMs tend to prefer longer, more verbose outputs. To account for this, we conducted a thorough analysis of our generated dataset to identify whether there were strong correlations between high scores and longer responses.

Our analysis revealed significant verbosity biases across different scoring scales. We observed a consistent positive correlation between higher scores and longer text outputs or responses, suggesting that the models generally rated more detailed and extensive responses higher. Conversely, concise outputs correlated with lower scores.

To address these verbosity biases, we implemented a straightforward yet effective transformation:

- We prompted a language model to increase the lengths of outputs with scores different from the highest by a specific number of words but preserve the content and style of the response.

- The number of additional words was determined by calculating the difference in median output lengths between each score level and the highest score.

Since the difference was usually between 20 and 40 words, the risk of varying the quality of the response in this transformation was low. This verbosity bias mitigation strategy sought to train our model to focus on substantive aspects of the responses rather than being influenced by verbosity.

Synthetically generating evaluations for training

The final step in our synthetic data creation process involved creating actual evaluation data for training Flow Judge. We prompted LLMs to produce verbal qualitative feedback before assigning the final score to the response since it has been proven to have a positive effect similar to chain-of-thought prompting techniques [2,3]. This sequence aims to emulate human evaluation processes, where reasoning precedes numerical scoring.

The two components of the evaluations are:

- Feedback: A detailed paragraph containing the LM evaluator's reasoning for the assigned score. This feedback also highlights any problematic areas in the generation, providing a rich context for the evaluation.

- Score: A numerical score derived from the specific rubric associated with the evaluation metric.

An example output of the evaluation:

<feedback>

The HR response provided is well-structured and offers practical advice on handling workplace conflicts.

…

Overall, the response offers some value but lacks the depth of originality and insight required for a higher score.

</feedback>

<score>

2

</score>

We prompted the LLM to produce structured outputs using XML tags to create a reliable parser.

To reduce ambiguity in our training labels, we implemented a dual-evaluation strategy. We obtained two independent evaluations: one from GPT-4o and another from Claude 3.5 Sonnet. Both models were configured with a temperature of 0.0 to promote consistency in their outputs.

The final label for each evaluation was determined through a consensus method, combining the insights from both evaluators. We established specific criteria for handling cases of significant disagreement. For instance, assessments with completely opposing scores were discarded.

In the final phase of our data creation process, we analyzed score distributions within our dataset. This revealed score imbalances in all scoring scales. To address this issue and create a more balanced dataset, we applied undersampling techniques to reduce the prevalence of majority classes. This step was crucial in mitigating potential biases in the training data and ensuring that Flow Judge would be equally adept at identifying and evaluating responses across the entire spectrum of scores.

After the consensus and discarded evaluations, we obtained a total of 5,102 evaluations. Below is a breakdown of the dataset.

| Pass / Fail | |

|---|---|

| Split | Count |

| train | 957 |

| validation | 56 |

| test | 316 |

| 3-Likert | |

| Split | Count |

| train | 1342 |

| validation | 187 |

| test | 300 |

| 5-Likert | |

| Split | Count |

| train | 1295 |

| validation | 375 |

| test | 274 |

Human review to ensure quality

After each step in the data generation pipeline, we randomly selected 100 data points for human review. This process focused on identifying problematic patterns or inconsistencies in the synthetically generated data.

We employed an iterative approach: first, we generated a small batch of data, reviewed it, addressed any identified issues, and then proceeded to generate the entire dataset. This method allowed us to catch and rectify potential problems early in the process, ensuring higher overall quality.

We integrated Argilla's user interface into our workflow to streamline the review process. By implementing this focused QA process, we maintained high data quality standards throughout the creation of our synthetic dataset.

Model Information

Architecture inherited from Phi-3.5-mini

Flow Judge is based on the Phi-3.5-mini architecture, and the base model checkpoint used is specifically its instruct version. The model uses the same tokenizer, supports MQA and Flash Attention 2, and has weights in bfloat16 precision.

However, post-finetuning, the model's support for languages and long context lengths has not been fully tested. Due to specialized Supervised Fine-Tuning (SFT), Flow Judge might show different benchmark results and support a maximum context length of 8192, shorter than the base model's.

Base model information

Phi-3.5-mini, a Transformer-based language model with 3.8 billion parameters, was unveiled by Microsoft in August 2024. This iteration supports a 128K token context length, enhancing the previous Phi-3 Mini version from June 2024. [14]

Microsoft's efforts build on the "Textbooks Are All You Need" approach, leveraging high-quality training data to boost the performance of smaller language models, diverging from typical scaling laws. [15]

A notable feature is its size and, therefore, its capability to run on resource-constrained devices. The phi-3-mini model can be quantized to 4-bits, reducing its memory footprint to roughly 1.8GB. Tests showed the quantized model efficiently runs on an iPhone 14 with an A16 Bionic chip, achieving a processing rate of over 12 tokens per second offline and natively. [16]

Post pre-training, the model underwent chat fine-tuning through Supervised Fine-Tuning (SFT) and Direct Preference Optimization (DPO), significantly improving its multilingual, multi-turn conversational quality and reasoning abilities. [17]

The chat template is of the following standard format:

```

<|user|>

Input

<|end|>

<|assistant|>

```

Phi-3-mini shares a structural similarity with Llama-2, utilizing the same tokenizer with a 320,641 token vocabulary. This compatibility ensures that Llama-2 packages can be easily adapted for phi-3-mini. The model architecture includes a 3072 hidden dimension, 32 heads, and 32 layers. [18]

Base model architecture

Phi-3.5-mini has 3.8B parameters and is a dense decoder-only Transformer model using the same tokenizer as Phi-3 Mini.

| Inputs | Text. It is best suited for prompts using a chat format. |

| Context length | 128K tokens |

| GPUs | 512 H100-80G |

| Training time | 10 days |

| Training data | 3.4T tokens of public documents, high-quality educational and code data, synthetic "textbook-like" data, and high-quality supervised chat data for human preferences. |

| Dates | Trained between June and August 2024 |

| Status | This is a static model trained on an offline dataset, with a cutoff date of October 2023 for publicly available data. As we improve the models, future versions of the tuned models may be released. |

| Supported languages | Arabic, Chinese, Czech, Danish, Dutch, English, Finnish, French, German, Hebrew, Hungarian, Italian, Japanese, Korean, Norwegian, Polish, Portuguese, Russian, Spanish, Swedish, Thai, Turkish, Ukrainian |

| Release date | August 2024 |

Fine-tuning

Data preparation

Raw inputs formatted and string interpolated to the Phi 3.5 prompt template

We started with raw input data where each required element ['inputs', 'output', 'criteria', 'rubric', 'feedback', 'score'] resides in separate columns. These columns are formatted and interpolated into final prompt strings for supervised fine-tuning.

Since phi-3.5-mini-instruct is already fine-tuned for chat, we adopted the same chat template structure in our finetuning.

Reading the input datasets, preprocessing & double checking results

Once the datasets were formatted into the required chat format, axolotl fine-tuning framework read them in with the correct configuration. It merged and shuffled the dataset splits—3-likert, 5-likert, and binary—separately for both training and validation. [19]

Here is an example of how we read the chat data in Axolotl:

```yaml

chat_template: phi_3_5

datasets:

- path: "flowaicom/e_evals_dataset_likert3_undersampled-v0.1-axolotl"

ds_type: parquet

type: chat_template

chat_template: phi_3_5

field_messages: messages

message_field_role: role

message_field_content: content

train_on_split: train

roles:

system:

- system

user:

- user

assistant:

- assistant

```

Before starting fine-tuning, we used axolotl's preprocessing to ensure the input training data is consistent. We loaded snapshots of the preprocessed datasets and manually inspected them in a code editor for accuracy.

Training process

We started by loading the base model microsoft/Phi-3.5-mini-instruct in half-precision bfloat16. For supervised finetuning, we used RSLoRa with rank 256 and alpha 128 values. [20,21]

Our finetuning ran for five epochs, employing a cosine scheduler with an extended warmup period. We opted for a low learning rate and incorporated Lora dropout and slight weight decay to prevent overfitting. The finetuning process used the dataset's training split, while the validation split was used to monitor evaluation loss. Post-training, we tested on a separate held-out test split that remained unseen during training.

We also experimented with the DoRA method but found no improvement over RSLoRa. Due to hardware limitations, we had to run DoRA with a smaller batch size, which may have negatively impacted the results. Additionally, DoRA is 20-30% slower than RSLoRa, limiting the number of experiments we could conduct within a given timeframe. [22,23]

Post-training

After finetuning, we benchmarked the checkpoints using our held-out and out-of-domain datasets to identify the best-performing model. We selected the checkpoint at finetuning step 231, corresponding to approximately 3.7 epochs out of the total 5 training epochs.

Some checkpoints showed complementary performance on the held-out and out-of-domain datasets. We attempted to merge these checkpoints using the PEFT library's TIES and DARE merging methods. However, this did not result in clear performance improvements, so we did not pursue it further. [24-26]

We used PEFT and transformers libraries to merge the trained LoRA adapter in F32 format into the base model in bfloat16 format, resulting in a bfloat16 model. At this step, we explicitly set flash_attention_2 as the attention backend. [27-29]

The fine-tuning hyperparameters are provided in Appendix C.

Quantization

We offer two quantization options: AWQ and GGUF. To create the quantized models, we used the AutoAWQ library and Llama.cpp. [30-33]

Additionally, Microsoft provides comprehensive instructions for quantizing the Phi 3.5 model architecture, which also apply to Flow Judge. [34]

Inference

Hardware requirements

To run Flow Judge efficiently, ensure your hardware meets the following requirements:

- Modern GPU with at least 4 GB VRAM (e.g., NVIDIA RTX series)

- Minimum of 8 GB of system memory

- At least 10GB of free storage for model files and dependencies.

By default, the model uses flash attention, requiring certain GPU hardware types to run. If you want to run the model on NVIDIA V100 or earlier generation GPUs choose attn_implementation="eager".

Inference: how to run Flow Judge

To run the Flow Judge model for inference, you can use our flow-judge library. Here's a quick-start example:

# Read the sample data

import json

from flow_judge.models.model_factory import ModelFactory

from flow_judge.flow_judge import EvalInput, FlowJudge

from flow_judge.metrics import RESPONSE_FAITHFULNESS_5POINT

from IPython.display import Markdown, display

# Create a model using ModelFactory

model = ModelFactory.create_model("Flow-Judge-v0.1-AWQ")

# Initialize the judge

faithfulness_judge = FlowJudge(

metric=RESPONSE_FAITHFULNESS_5POINT,

model=model

)

# Load data

with open("sample_data/csr_assistant.json", "r") as f:

data = json.load(f)

# Create a list of inputs and outputs

inputs_batch = [

[

{"user_instructions": sample["user_instructions"]},

{"customer_issue": sample["customer_issue"]},

{"context": sample["context"]}

]

for sample in data

]

outputs_batch = [sample["response"] for sample in data]

# Create a list of EvalInput

eval_inputs_batch = [EvalInput(inputs=inputs, output=output) for inputs, output in zip(inputs_batch, outputs_batch)]

# Run the batch evaluation

results = faithfulness_judge.batch_evaluate(eval_inputs_batch, save_results=False)

flow-judge supports multiple model types, including Hugging Face Transformers and vLLM, and offers various pre-defined evaluation metrics. For more advanced usage, custom metrics, and batch evaluations, check out the flow-judge repository.

Performance benchmarks

Flow Judge, running in bfloat16 precision, delivers robust performance on a single NVIDIA 4090 GPU. We benchmarked the model on the halubench_halueval dataset in lm-evaluation-harness, utilizing vllm 0.6.1.post2 and vllm-flash-attn 2.6.1 libraries. [35]

The model processes at an average speed of around 1000 tokens per second, achieving top input speeds of 5870 tokens per second and an output speed of 1045 tokens per second, with throughput accelerating towards the end. Running through the dataset in 21 minutes and 46 seconds.

We find that the AWQ version quantized to 4-bit using GEMM-kernel runs in 18 minutes and 35 seconds on the same setup and scores similar scores in halubench_halueval task, displaying only very slight degradation.

Inference and evaluation results comparison

Flow Judge in bfloat16:

| Processing time | 21 minutes 46 seconds |

| Token input speed | 5870 tokens/s |

| Output speed | 1045 tokens/s |

Evaluation metrics for bfloat16 in halubench_halueval task:

| Metric | Value |

|---|---|

| Accuracy | 0.8589 |

| F1 Binary | 0.8609 |

| Kendall Tau Corr | 0.7181 |

| Pearson Corr | 0.7181 |

| Precision Binary | 0.8469 |

| Recall Binary | 0.8754 |

| Spearman Corr | 0.7181 |

Flow Judge in AWQ 4-bit (GEMM) quantized:

| Processing time | 18 minutes 35 seconds |

| Token input speed | 6881 tokens/s |

| Output speed | 1202 tokens/s |

Evaluation metrics for AWQ 4-bit (GEMM) in halubench_halueval task:

| Metric | Value |

|---|---|

| Accuracy | 0.8568 |

| F1 Binary | 0.8641 |

| Kendall Tau Corr | 0.7180 |

| Pearson Corr | 0.7180 |

| Precision Binary | 0.8209 |

| Recall Binary | 0.9121 |

| Spearman Corr | 0.7180 |

Overall, the performance of both the unquantized and quantized versions showcases the Flow Judge’s capability to process extensive datasets with ease.

Performance

Datasets

We evaluated Flow Judge on our held-out dataset and a combination of publicly available relevant benchmarks for evaluating LM judges.

Held-out test set

We evaluated Flow Judge on a held-out split of our dataset. This split contains unseen metrics during training and evaluates the correlation of Flow Judge with GPT-4o and Claude 3.5 Sonnet.

We evaluated separately on the 3 different scoring scales: Pass / Fail, 3-Likert, and 5-Likert.

RAGTruth

RAGTruth [6] is an academic dataset that studies word-level hallucinations in LLM applications using Retrieval-Augmented Generation (RAG) frameworks. It contains about 18,000 responses generated by various LLMs using RAG across different domains and tasks. These responses have been carefully annotated manually, both at the response and word levels, including assessments of hallucination intensity.

The dataset is split into three RAG tasks: Question Answering (QA), Data-to-text writing, and News Summarization. The QA and News Summarization test splits contain similar data to our training data, while the data-to-text split contains JSON inputs.

We formatted the Pass / Fail evaluations as described in the original paper and evaluated the ability of our model to detect hallucinations at the response level.

HaluEval

HaluEval [7] is another dataset designed to evaluate the ability of LM evaluators to detect hallucinations. It consists of a large collection of generated and human-annotated hallucinated samples to evaluate the performance of LLMs in recognizing hallucinations.

It contains general user queries with LLM-generated responses and task-specific examples spanning question-answering, knowledge-grounded dialogue, and text summarization.

We used the HaluEval subset from HaluBench [4], which contains 10k questions with knowledge from Wikipedia, as well as question text and ground-truth answers collected from HotpotQA.

PubMedQA

PubMedQA [36] is a specialized dataset for biomedical question-answering tasks. It is compiled from PubMed's abstracts, a comprehensive medical literature database. The dataset presents research questions that require responses in the form of "yes," "no," or "maybe." Additionally, each answer is accompanied by an extended explanation that draws supporting evidence from the provided context, offering a more detailed justification for a given response.

We used the subset from HaluBench [4] formatted as Pass / Fail, which contains additional perturbations to the original dataset to generate hallucinated answers that appear plausible but are not faithful to the context.

Covid-QA

The COVID-QA [37] dataset consists of 2k question-answer pairs annotated by volunteer biomedical experts on scientific articles related to COVID-19.

We also used the subset from HaluBench [4] formatted as Pass / Fail, which also contains perturbations.

Feedback bench

Feedback Bench is a dataset generated using the same procedure as the Feedback Collection dataset published in [2]. It contains 1,000 evaluations on a 5-Likert scale generated by GPT-4.

We evaluated the correlation of Flow Judge with a frontier model on fine-grained evaluations and compare its performance to prometheus-7b-v2.0 [3].

Note that the prometheus-7b-v2.0 model was evaluated using a reference answer as an input. However, this is usually impractical in production. Therefore, we ignored the reference answers for our evaluation of Flow Judge and baselines.

Baselines

To demonstrate the effectiveness of our fine-tuning process, we used the checkpoint of Phi-3.5-mini-instruct [17] as our primary baseline. Additionally, we selected two larger popular open-source instruction LMs as strong baselines: Llama-3.1-8B-Instruct [38] and Mistral-Nemo-Instruct-2407 [39]. We also compared our model to gpt-4o-mini.

For comparison, we obtained results using the same prompt template, formatting requirements, and a combination of hyperparameters employed for Flow Judge.

For detailed information on prompts and hyperparameters, please refer to Appendix B.

To evaluate Flow Judge's generalization capabilities, we also evaluated its performance on publicly available out-of-domain datasets. We compared these results to those of recent LM evaluators, including open models like Prometheus 2 [3] and Lynx [4] or closed-source models like Luna [5]. For these models, we report the metrics as published in their respective original papers.

Metrics

For comparisons on Pass / Fail datasets, we use precision, recall, and F1-score. Additionally, we report accuracy for HaluEval [7] and PubMedQA [36] since that was the metric reported in the Lynx [4] paper.

For comparisons on Likert scales, we use pearsonr, spearmanr, and kendall-tau correlations as reported in the Prometheus 2 [3] paper.

Results

We run our evaluations using our fork of the LM-evaluation-harness [40] by EleutherAI. We made the evaluation code and results publicly available in a private fork of the harness here.

The instructions for reproducing the evaluations can be found in the flow_judge_eval task folder. We utilized the vLLM engine to run the evaluations. The results generated by the lm-evaluation-harness are included in the results/ folder.

Held-out test sets

We evaluated our models and baselines on the held-out test sets. Since the evaluations in our dataset are obtained from consensus between GPT-4o and Claude Sonnet 3.5, the results are a measure of the correlation or agreement of the models with these reference evaluators.

Flow Judge achieved the best performance on the pass/fail held-out test set, significantly outperforming its foundation model, Phi-3.5-mini-instruct [17], and larger models like Meta-Llama-3.1-8B-Instruct [38] and Mistral-Nemo-Instruct-2407 [39]. Notably, Flow Judge obtained the highest precision and F1 score among all models tested, including the smaller version of the GPT family.

| Pass / Fail Held-out Test set | |||

| Evaluator | Precision | Recall | F1 |

| microsoft/Phi-3.5-mini-instruct | 0.685 | 1.000 | 0.813 |

| meta-llama/Meta-Llama-3.1-8B-Instruct | 0.870 | 0.982 | 0.923 |

| mistralai/Mistral-Nemo-Instruct-2407 | 0.709 | 0.994 | 0.827 |

| gpt-4o-mini | 0.834 | 1.000 | 0.910 |

| flowaicom/Flow-Judge-v0.1 | 0.940 | 0.972 | 0.955 |

For the 3-Likert and 5-Likert, we measured correlations with the reference evaluators GPT-4o and Claude 3.5 Sonnet. Flow Judge shows strong correlations with these models, surpassing its base model by an average of 18%. Despite its significantly smaller size and more limited training data, it also marginally outperforms larger open models, including the popular and very capable prometheus-eval/prometheus-7b-v2.0 [3]. Our model also shows comparable performance to gpt-4o-mini on these test sets.

| 3-Likert Held-out Test set | 5-Likert Held-out Test set | |||||

| Evaluator | pearsonr | spearmanr | kendall-tau | pearsonr | spearmanr | kendall-tau |

| microsoft/Phi-3.5-mini-instruct | 0.756 | 0.749 | 0.695 | 0.808 | 0.819 | 0.739 |

| prometheus-eval/prometheus-7b-v2.0* | - | - | - | 0.910 | 0.908 | 0.838 |

| meta-llama/Meta-Llama-3.1-8B-Instruct | 0.836 | 0.833 | 0.789 | 0.854 | 0.868 | 0.791 |

| mistralai/Mistral-Nemo-Instruct-2407 | 0.813 | 0.807 | 0.758 | 0.870 | 0.867 | 0.789 |

| gpt-4o-mini | 0.890 | 0.888 | 0.851 | 0.923 | 0.923 | 0.864 |

| flowaicom/Flow-Judge-v0.1 | 0.888 | 0.888 | 0.852 | 0.919 | 0.919 | 0.856 |

RAGTruth

In the RAGTruth QA task [6], Flow Judge achieved comparable performance to larger open models. It also performs at par with its foundation model, microsoft/Phi-3.5-mini-instruct [17].

In the RAGTruth Data-to-Text task [6], Flow Judge shows significant underperformance compared to specialized models like Luna[5], which are fine-tuned specifically for this type of data. This performance gap was anticipated, given that our training dataset did not incorporate structured inputs/outputs.

In RAGTruth Summarization [6], Flow Judge also achieves comparable performance to its baselines, achieving the highest precision score.

These results suggest that Flow Judge can generalize to tasks where the distribution of inputs and outputs is not significantly different from the training data. However, our model faces challenges in domains requiring structured data interpretation and mathematical reasoning, highlighting potential areas for future model improvements and dataset diversification.

| RAGTruth QA | RAGTruth Data-to-Text | RAGTruth Summarization | |||||||

| Evaluator | Precision | Recall | F1 | Precision | Recall | F1 | Precision | Recall | F1 |

| microsoft/Phi-3.5-mini-instruct | 0.817 | 0.963 | 0.884 | 0.356 | 1.000 | 0.525 | 0.776 | 1.000 | 0.874 |

| meta-llama/Meta-Llama-3.1-8B-Instruct | 0.844 | 0.986 | 0.910 | 0.382 | 0.537 | 0.447 | 0.797 | 0.940 | 0.863 |

| mistralai/Mistral-Nemo-Instruct-2407 | 0.821 | 0.995 | 0.900 | 0.357 | 1.000 | 0.526 | 0.775 | 1.000 | 0.873 |

| gpt-4o-mini | 0.830 | 0.966 | 0.893 | 0.398 | 0.994 | 0.569 | 0.786 | 0.997 | 0.879 |

| Luna* | 0.378 | 0.800 | 0.513 | 0.649 | 0.912 | 0.759 | 0.400 | 0.765 | 0.525 |

| RAGAS Faithfuless* | 0.312 | 0.419 | 0.357 | 0.792 | 0.508 | 0.619 | 0.642 | 0.299 | 0.408 |

| Trulens Groundedness* | 0.228 | 0.925 | 0.366 | 0.669 | 0.965 | 0.790 | 0.402 | 0.500 | 0.445 |

| flowaicom/Flow-Judge-v0.1 | 0.835 | 0.961 | 0.894 | 0.541 | 0.249 | 0.341 | 0.834 | 0.836 | 0.835 |

Accuracy for Lynx models on the RAGTruth QA subset is reported in [4]. Lynx 8B achieves 80.0% accuracy while Lynx 70B achieves 80.2%. Flow Judge matches the performance of these two models with a slightly higher accuracy of 81.2%, despite being smaller and not trained on the RAGTruth QA train set.

HaluEval, Covid-QA and PubMedQA

Our model demonstrates significant progress in the HaluEval [7] benchmark, surpassing its base Phi-3.5-mini-instruct [17] by approximately 8%. This improvement indicates enhanced capabilities in hallucination detection post fine-tuning. Flow Judge also outperforms the larger Mistral NeMo [39] and closely ranks under Llama 3.1 8B instruct [38] and gpt-4o-mini.

When compared to Lynx [4], a model fine-tuned on hallucination detection data, Flow Judge exhibited a remarkable performance comparable to Lynx's smaller version.

In the Covid-QA subset of HaluBench [4,37], Flow Judge maintains its superior performance over the open-source baselines. However, the Lynx family of models outperforms Flow Judge in terms of accuracy on this benchmark.

Flow Judge shows lower recall performance on the PubMedQA subset of HaluBench [4,36] compared to baselines. This is likely due to PubMedQA's long contexts and answers, as well as its focus on biomedical research requiring quantitative reasoning. These challenges highlight areas for improving the next models.

| HaluEval | Covid-QA | PubMedQA | ||||||||||

| Evaluator | Precision | Recall | F1 | Accuracy | Precision | Recall | F1 | Accuracy | Precision | Recall | F1 | Accuracy |

| microsoft/Phi-3.5-mini-instruct | 0.730 | 0.914 | 0.812 | 0.788 | 0.617 | 0.964 | 0.752 | 0.681 | 0.623 | 0.986 | 0.764 | 0.696 |

| meta-llama/Meta-Llama-3.1-8B-Instruct | 0.864 | 0.891 | 0.878 | 0.874 | 0.663 | 0.976 | 0.790 | 0.734 | 0.681 | 0.962 | 0.797 | 0.750 |

| mistralai/Mistral-Nemo-Instruct-2407 | 0.655 | 0.993 | 0.789 | 0.735 | 0.651 | 0.982 | 0.783 | 0.728 | 0.602 | 0.994 | 0.750 | 0.669 |

| gpt-4o-mini | 0.846 | 0.940 | 0.891 | 0.885 | 0.795 | 0.964 | 0.872 | 0.858 | 0.791 | 0.904 | 0.843 | 0.832 |

| flowaicom/Flow-Judge-v0.1 | 0.826 | 0.895 | 0.859 | 0.854 | 0.767 | 0.877 | 0.818 | 0.807 | 0.874 | 0.624 | 0.728 | 0.767 |

| gpt-4o* | - | - | - | 0.879 | - | - | - | 0.821 | - | - | - | 0.821 |

| Claude 3 Sonnet* | - | - | - | 0.845 | - | - | - | 0.829 | - | - | - | 0.829 |

| RAGAS Faithfulness* | - | - | - | 0.706 | - | - | - | 0.750 | - | - | - | 0.669 |

| Lynx 8B* | - | - | - | 0.857 | - | - | - | 0.963 | - | - | - | 0.852 |

| Lynx 70B* | - | - | - | 0.884 | - | - | - | 0.975 | - | - | - | 0.904 |

Feedback bench

Flow Judge demonstrates strong performance on the Feedback Bench dataset [2], outperforming the established baselines Phi-3.5-mini-instruct [17], Meta-Llama-3.1-8B-Instruct [38], and Mistral-Nemo-Instruct-2407 [39] across all correlation metrics. Notably, it achieves results comparable to gpt-4o-mini, showcasing its competitiveness with advanced language models.

While Prometheus-7b-v2.0 achieves the highest scores, it's important to note that Feedback Bench [2,3] is an in-domain dataset for this model, potentially giving it an advantage.

Note that the authors used reference answers for grading outputs. Since this is impractical for most production settings, we did not used these answers for reporting the results on Feedback Bench.

| Feedback bench | |||

| Evaluator | pearsonr | spearmanr | kendall-tau |

| microsoft/Phi-3.5-mini-instruct | 0.710 | 0.721 | 0.622 |

| prometheus-eval/prometheus-7b-v2.0* | 0.878 | 0.909 | 0.773 |

| meta-llama/Meta-Llama-3.1-8B-Instruct | 0.742 | 0.749 | 0.654 |

| mistralai/Mistral-Nemo-Instruct-2407 | 0.720 | 0.724 | 0.632 |

| gpt-4o-mini | 0.797 | 0.795 | 0.701 |

| flowaicom/Flow-Judge-v0.1 | 0.787 | 0.789 | 0.688 |

Summary

We demonstrate that Flow Judge achieves strong correlations with standard proprietary evaluators like GPT-4o and Claude 3.5 Sonnet on our held-out test sets, outperforming larger models in pass/fail and Likert scale evaluations, despite being a compact model fine-tuned on a small synthetic dataset.

We also showcased its ability to generalize on other benchmarks such as RAGTruth [6], subsets of HaluBench [4] and Feedback Bench [2].

While it shows some limitations in specialized tasks not emphasized in its training, Flow Judge's overall performance highlights the potential of small fine-tuned LMs as evaluators.

License

We opted for the Apache 2.0 license for Flow Judge to provide the community with an open, small yet powerful LM evaluator. Our goal is to support the wider adoption of rigorous evaluation techniques in LLM system development, making them more accessible to practitioners and researchers.

Limitations and future work

Multilingual evaluation: Flow Judge has been fine-tuned exclusively on English data. While the foundation model (Phi-3.5-mini-instruct [17]) may possess multilingual capabilities, we have not systematically evaluated Flow Judge performance in non-English contexts. We plan to explore multi-lingual LM evaluators in the future.

Long context and structured Inputs: Our training dataset encompasses a wide range of custom metrics relevant to evaluating LLM systems. However, it does not include examples with long context inputs or structured data formats such as JSON, since these are harder to synthetically generate. This limitation may impact Flow Judge's performance when evaluating responses that require processing extensive context or parsing structured input. Extending our model’s capabilities to handle these input types represents an important area for future research.

Math and coding: The current version has not been trained on specific task domains such as arithmetic problems or code evaluation. As a result, its performance in these specialized areas may be limited. Future iterations of the model should address these gaps.

Domain-specific knowledge and complex multi-step evaluations: Flow Judge may struggle with highly specialized domain knowledge or proprietary data outside the training scope of its foundation model. Additionally, evaluation tasks requiring multi-step reasoning or complex logical processes may challenge the model's capabilities. We strongly recommend conducting meta-evaluations of the model performance before deploying it in specialized or highly complex evaluation scenarios.

What’s next for Flow Judge?

We have plans to keep upgrading our model and releasing new improved versions that address the limitations described in this report.

We are also keen to receive feedback from the community, and work together to create more reliable open and specialised LM evaluators.

Together with the model, we will be releasing tutorials for anyone to use Flow Judge in AI application development with frameworks like Llama Index. Also, we will be releasing a tutorial about how to use Flow Judge for real-time monitoring.

Acknowledgments

We would like to express our gratitude to other developers in the community that created several tools that significantly contributed to the success of this project:

- Unsloth library provided essential optimizations for fine-tuning, enhancing the efficiency of our model training process.

- Hugging Face ecosystem, which offers an invaluable foundation for model building and dataset management.

- Argilla team (now HF) for its user-friendly interface, which facilitated efficient data annotation and review. Also, for building and open-sourcing distilabel, which was instrumental in enabling the creation of synthetic data pipelines.

- Maintainers of axolotl for streamlining the fine-tuning of large language models.

- Microsoft Phi team for their contributions to the Phi-3.5-mini architecture and their work on synthetic data generation. The "Textbooks Are All You Need" approach significantly influenced our model's development.

References

- [1] A. Dubey et al., ‘The Llama 3 Herd of Models’, ArXiv, vol. abs/2407.21783, 2024.

- [2] S. Kim et al., "Prometheus: Inducing Fine-grained Evaluation Capability in Language Models," arXiv preprint arXiv:2310.08491, 2023.

- [3] S. Kim et al., "Prometheus 2: An Open Source Language Model Specialized in Evaluating Other Language Models," arXiv preprint arXiv:2405.01535, 2024.

- [4] S. S. Ravi, B. Mielczarek, A. Kannappan, D. Kiela, and R. Qian, "Lynx: An Open Source Hallucination Evaluation Model," arXiv preprint arXiv:2407.08488, 2024.

- [5] M. Belyi, R. Friel, S. Shao, and A. Sanyal, "Luna: An Evaluation Foundation Model to Catch Language Model Hallucinations with High Accuracy and Low Cost," arXiv preprint arXiv:2406.00975, 2024.

- [6] Y. Wu et al., "RAGTruth: A Hallucination Corpus for Developing Trustworthy Retrieval-Augmented Language Models," in Annual Meeting of the Association for Computational Linguistics, 2023.

- [7] J. Li, X. Cheng, W. X. Zhao, J. Nie, and J.-R. Wen, "HaluEval: A Large-Scale Hallucination Evaluation Benchmark for Large Language Models," arXiv preprint arXiv:2305.11747, 2023.

- [8] C. Xu et al., "WizardLM: Empowering Large Language Models to Follow Complex Instructions," arXiv preprint arXiv:2304.12244, 2023.

- [9] Z. Wang et al., "CodecLM: Aligning Language Models with Tailored Synthetic Data," arXiv preprint arXiv:2404.05875, 2024.

- [10] C. Xu et al., "WizardLM: Empowering Large Language Models to Follow Complex Instructions," arXiv preprint arXiv:2304.12244, 2023.

- [11] N. Ding et al., "Enhancing Chat Language Models by Scaling High-quality Instructional Conversations," in Conference on Empirical Methods in Natural Language Processing, 2023.

- [12] K. Saito, A. Wachi, K. Wataoka, and Y. Akimoto, ‘Verbosity Bias in Preference Labeling by Large Language Models’, ArXiv, vol. abs/2310.10076, 2023.

- [13] Y. Dubois, B. Galambosi, P. Liang, and T. Hashimoto, ‘Length-Controlled AlpacaEval: A Simple Way to Debias Automatic Evaluators’, ArXiv, vol. abs/2404.04475, 2024.

- [14] “Phi-3CookBook/md/01.Introduce/Phi3Family.md at c53fa9fda5df6a42476dd8ba5f1ccb446dd1608c · microsoft/Phi-3CookBook,” GitHub. Accessed: Sep. 16, 2024. [Online]. Available: https://github.com/microsoft/Phi-3CookBook/blob/c53fa9fda5df6a42476dd8ba5f1ccb446dd1608c/md/01.Introduce/Phi3Family.md

- [15] S. Gunasekar et al., “Textbooks Are All You Need,” Oct. 02, 2023, arXiv: arXiv:2306.11644. doi: 10.48550/arXiv.2306.11644.

- [16] M. Abdin et al., “Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone,” Aug. 30, 2024, arXiv: arXiv:2404.14219. doi: 10.48550/arXiv.2404.14219.

- [17] “microsoft/Phi-3.5-mini-instruct · Hugging Face.” Accessed: Sep. 16, 2024. [Online]. Available: https://huggingface.co/microsoft/Phi-3.5-mini-instruct

- [18] H. Touvron et al., “LLaMA: Open and Efficient Foundation Language Models,” Feb. 27, 2023, arXiv: arXiv:2302.13971. doi: 10.48550/arXiv.2302.13971.

- [19] “axolotl-ai-cloud/axolotl: Go ahead and axolotl questions.” Accessed: Sep. 16, 2024. [Online]. Available: https://github.com/axolotl-ai-cloud/axolotl

- [20] D. Kalajdzievski, “A Rank Stabilization Scaling Factor for Fine-Tuning with LoRA,” Nov. 27, 2023, arXiv: arXiv:2312.03732. doi: 10.48550/arXiv.2312.03732.

- [21] S. R. PhD, “Practical Tips for Finetuning LLMs Using LoRA (Low-Rank Adaptation).” Accessed: Sep. 16, 2024. [Online]. Available: https://magazine.sebastianraschka.com/p/practical-tips-for-finetuning-llms

- [22] S. R. PhD, “Improving LoRA: Implementing Weight-Decomposed Low-Rank Adaptation (DoRA) from Scratch.” Accessed: Sep. 16, 2024. [Online]. Available: https://magazine.sebastianraschka.com/p/lora-and-dora-from-scratch

- [23] S.-Y. Liu et al., “DoRA: Weight-Decomposed Low-Rank Adaptation,” Jul. 09, 2024, arXiv: arXiv:2402.09353. doi: 10.48550/arXiv.2402.09353.

- [24] huggingface/peft. (Sep. 16, 2024). Python. Hugging Face. Accessed: Sep. 16, 2024. [Online]. Available: https://github.com/huggingface/peft

- [25] L. Yu, B. Yu, H. Yu, F. Huang, and Y. Li, “Language Models are Super Mario: Absorbing Abilities from Homologous Models as a Free Lunch,” Jun. 13, 2024, arXiv: arXiv:2311.03099. doi: 10.48550/arXiv.2311.03099.

- [26] P. Yadav, D. Tam, L. Choshen, C. Raffel, and M. Bansal, “TIES-Merging: Resolving Interference When Merging Models,” Oct. 26, 2023, arXiv: arXiv:2306.01708. doi: 10.48550/arXiv.2306.01708.

- [27] T. Wolf et al., Transformers: State-of-the-Art Natural Language Processing. (Oct. 2020). Python. Association for Computational Linguistics. Accessed: Sep. 16, 2024. [Online]. Available: https://www.aclweb.org/anthology/2020.emnlp-demos.6

- [28] T. Dao, D. Y. Fu, S. Ermon, A. Rudra, and C. Ré, “FlashAttention: Fast and Memory-Efficient Exact Attention with IO-Awareness,” Jun. 23, 2022, arXiv: arXiv:2205.14135. doi: 10.48550/arXiv.2205.14135.

- [29] Dao-AILab/flash-attention. (Sep. 16, 2024). Python. Dao AI Lab. Accessed: Sep. 16, 2024. [Online]. Available: https://github.com/Dao-AILab/flash-attention

- [30] J. Lin et al., “AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration,” Jul. 18, 2024, arXiv: arXiv:2306.00978. doi: 10.48550/arXiv.2306.00978.

- [31] Casper, casper-hansen/AutoAWQ. (Sep. 16, 2024). P*microsoftython. Accessed: Sep. 16, 2024. [Online]. Available: https://github.com/casper-hansen/AutoAWQ

- [32] G. Gerganov, ggerganov/ggml. (Sep. 16, 2024). C++. Accessed: Sep. 16, 2024. [Online]. Available: https://github.com/ggerganov/ggml

- [33] “GGUF.” Accessed: Sep. 16, 2024. [Online]. Available: https://huggingface.co/docs/hub/en/gguf

- [34] “Phi-3CookBook/md/08.Update/Phi35/021.UsingLlamacppQuantifyingPhi35.md at main · microsoft/Phi-3CookBook,” GitHub. Accessed: Sep. 16, 2024. [Online]. Available: https://github.com/microsoft/Phi-3CookBook/blob/main/md/08.Update/Phi35/021.UsingLlamacppQuantifyingPhi35.md

- [35] vllm-project/vllm. (Sep. 17, 2024). Python. vLLM. Accessed: Sep. 17, 2024. [Online]. Available: https://github.com/vllm-project/vllm

- [36] Q. Jin, B. Dhingra, Z. Liu, W. W. Cohen, and X. Lu, "PubMedQA: A Dataset for Biomedical Research Question Answering," in Conference on Empirical Methods in Natural Language Processing, 2019.

- [37] T. Möller, A. Reina, R. Jayakumar, and M. Pietsch, ‘COVID-QA: A Question Answering Dataset for COVID-19’, 2020.

- [38] “meta-llama/Meta-Llama-3.1-8B-Instruct · Hugging Face.” Accessed: Sep. 16, 2024. [Online]. Available: https://huggingface.co/meta-llama/Meta-Llama-3.1-8B-Instruct

- [39] “mistralai/Mistral-Nemo-Instruct-2407 · Hugging Face.” Accessed: Sep. 16, 2024. [Online]. Available: https://huggingface.co/mistralai/Mistral-Nemo-Instruct-2407

- [40] L. Gao et al., ‘A framework for few-shot language model evaluation’. Zenodo, 07 2024.

Appendix A. Full list of seed metrics and their definitions

Response Quality

| Response correctness | Measures the accuracy of a system's generated answer by measuring whether it matches the provided reference or ground truth answer for a given query. |

| Response faithfulness | Measures whether the generated response is consistent with and supported by the context information, essentially checking for any hallucinated or fabricated content that deviates from the provided context. |

| Response relevance | Measures how well a generated response addresses the query by measuring the relevance and pertinence of the response to the query, without extraneous or irrelevant information. |

| Response conciseness | Measures whether the response is concise and free of unnecessary information. Ensures the response is to the point and efficiently addresses the query. |

| Response novelty | Measures the originality and uniqueness of the response. Evaluates whether the response presents new ideas or perspectives. |

Retrieval Quality

| Context Utilization | Measures how complete the generated response is for the query specified given the information provided in the context. It measures if the generated response has sufficiently used the retrieved context to answer the query. |

| Context Relevance | Measures whether the retrieved context is relevant and sufficient to respond to the query. |

| Context recall | Measures the extent to which the context aligns with the reference response. |

| Context precision | Measures whether the AI system accurately identifies and prioritizes relevant details in the context provided, which is important for generating specific and useful responses and avoiding vague or general responses. |

Bias and Toxicity

| Toxicity | Detects whether the response contains harmful, offensive, or inappropriate content. Ensures the response adheres to ethical and safety standards. |

| Bias | Identifies any gender, racial, or political bias in the response. Ensures the generated content is unbiased and fair. |

Style and Guideline Adherence

| Tonality compliance | Measures whether the response matches the required persona's tone. Ensures that the response adheres to the desired tone and style. |

| Guideline compliance | Measures adherence to specific guidelines provided for generating the response. Ensures the response follows the predefined rules and standards. |

| Style transfer accuracy | Measures how accurately the AI system can change the writing style of a given text without losing the original meaning or important information. |

| Content preservation accuracy | Measures how well the AI system retains the key information and context during the style transfer process or paraphrasing. |

| Stylistic compliance | Measures the ability of the AI system to incorporate specific stylistic elements such as formality, humor, or technical jargon appropriately. |

Language

| Grammatical correctness | Measures the grammatical correctness and fluency of the generated text, ensuring high-quality and professional communication. |

| Readability | Measures the variety and complexity of sentence structures of the text generated by the AI system, contributing to more engaging and readable text. |

Miscellaneous

| Sub-query decomposition | Measures the completeness and accuracy of sub-questions generated from a main query. Ensures that all aspects of the main question are covered. |

| Query reformulation | Measures how accurately the variations of the query represent the same question. |

| Instruct-following ability | Measures how accurately the AI system followed the user instructions in a specific task. |

| Instruct-following ability with context | Measures how accurately the AI system followed the user instructions in a specific task, considering the context. |

| Open-ended generation ability | Measures the ability of the AI system to propose solutions to open-ended problems that need to be solved for the user. |

| Logical reasoning | Measures the ability of the AI system to apply logical steps and reasoning to arrive at a conclusion requested by the user. |

| Decision making | Measures the ability of the AI system to choose the best course of action among several alternatives proposed by the user or stated in the context. |

| Strategic planning | Measures the ability of the AI system to develop and implement long-term plans to achieve the user's goals. |

Appendix B. Prompt template and hyperparameters

# GOAL

Your job is to evaluate a task carried out by an AI system powered by a large language model. You will be provided with the inputs and output of the task, as well as the evaluation criteria and scoring rubric. Your task is to evaluate the output of the AI system based on the evaluation criteria and scoring rubric provided.

# INPUT/s

Below are the inputs required for performing the task:

<inputs>

{INPUTS}

</inputs>

# OUTPUT

Below is the output of the task:

<output>

{OUTPUT}

</output>

# EVALUATION CRITERIA AND SCORING RUBRIC

Here are the evaluation criteria and the rubric that you need to use for evaluating the task:

<evaluation_criteria>

{EVALUATION_CRITERIA}

</evaluation_criteria>

<scoring_rubric>

{RUBRIC}

</scoring_rubric>

# INSTRUCTIONS FOR THE EVALUATION

1. Understand the task and criteria: Familiarize yourself with the task to be evaluated. Review the evaluation criteria and scoring rubric to understand the different levels of performance and the descriptions for each score.

2. Review the inputs and output: Look at the inputs provided for the task. Examine the output generated from completing the task.

3. Compare output to score descriptions: Compare the output against the criteria and score descriptions in the scoring rubric. For each criterion, decide which description best matches the output.

4. After comparing the output to the score descriptions, pay attention to the small details that might impact the final score that you assign. Sometimes a small difference can dictate the final score.

5. Write verbal feedback justifying your evaluation that includes a detailed rationale, referring to specific aspects of the output and comparing them to the rubric.

6. Assign a final score based on the scoring rubric.

## FORMAT FOR THE EVALUATION

- Write the verbal feedback inside <feedback> tags without any additional surrounding text.

- Write the numeric score inside <score> tags, without any additional surrounding text and always after the feedback.

Please accurately evaluate the task. Strictly adhere to the evaluation criteria and rubric.

Flow Judge user prompt template with inputs

# GOAL

Your job is to evaluate a task carried out by an AI system powered by a large language model.

You will be provided the output of the task, as well as the evaluation criteria and scoring rubric. Your task is to evaluate the output of the AI system based on the evaluation criteria and scoring rubric provided.

# OUTPUT

Below is the output of the task:

<output>

{OUTPUT}

</output>

# EVALUATION CRITERIA AND SCORING RUBRIC

Here are the evaluation criteria and the rubric that you need to use for evaluating the task:

<evaluation_criteria>

{EVALUATION_CRITERIA}

</evaluation_criteria>

<scoring_rubric>

{RUBRIC}

</scoring_rubric>

# INSTRUCTIONS FOR THE EVALUATION

1. Understand the task and criteria: Familiarize yourself with the task to be evaluated. Review the evaluation criteria and scoring rubric to understand the different levels of performance and the descriptions for each score.

2. Review the output: Examine the output generated from completing the task.

3. Compare output to score descriptions: Compare the output against the criteria and score descriptions in the scoring rubric. For each criterion, decide which description best matches the output.

4. After comparing the output to the score descriptions, pay attention to the small details that might impact the final score that you assign. Sometimes a small difference can dictate the final score.

5. Write verbal feedback justifying your evaluation that includes a detailed rationale, referring to specific aspects of the output and comparing them to the rubric.

6. Assign a final score based on the scoring rubric.

## FORMAT FOR THE EVALUATION

- Write the verbal feedback inside <feedback> tags without any additional surrounding text.

- Write the numeric score inside <score> tags, without any additional surrounding text and always after the feedback.

Please accurately evaluate the task. Strictly adhere to the evaluation criteria and rubric.

Flow Judge user prompt template without inputs

# GOAL

Your job is to evaluate a task carried out by an AI system powered by a large language model.

You will be provided with the inputs and output of the task, as well as the evaluation criteria and scoring rubric. Your task is to evaluate the output of the AI system based on the evaluation criteria and scoring rubric provided.

# INPUT/s

Below are the inputs required for performing the task:

<inputs>

<customer_query>

Subject: Issues with recent order #12345

Hello,

I recently received my order #12345, but I'm having a few problems:

1. The blue t-shirt I ordered is actually green. Can I exchange it?

2. The phone case doesn't fit my iPhone 13 Pro Max. The website said it was compatible.

3. I was charged for express shipping, but it took over a week to arrive. Can I get a refund on the shipping cost?

Please let me know how we can resolve these issues. I've been a loyal customer for years and this is the first time I've had problems like this.

Thank you,

Jane Smith

</customer_query>

</inputs>

# OUTPUT

Below is the output of the task:

<output>

Dear Jane Smith,

Thank you for reaching out about the issues with your recent order #12345. We sincerely apologize for the inconvenience you've experienced. Let me address each of your concerns:

1. T-shirt color discrepancy: We're sorry about the mix-up with the t-shirt color. We'd be happy to exchange it for the correct blue t-shirt. I'll send you a prepaid return label for the green shirt, and we'll ship out the blue one as soon as we receive the return.

2. Phone case compatibility: We apologize for the confusion regarding the phone case compatibility. Our website information should have been more accurate. We'll gladly accept a return of the incompatible case and issue a full refund for it. Additionally, I can recommend a case that will fit your iPhone 13 Pro Max if you're interested.

3. Shipping charge: You're absolutely right about the shipping charge. Since the delivery didn't meet our express shipping standards, we will refund the entire shipping cost to your original payment method. This refund should process within 3-5 business days.

To initiate these resolutions, please reply to this email confirming that you'd like to proceed with the t-shirt exchange and phone case return. Once confirmed, I'll send you the necessary return labels and process the shipping refund immediately.

We truly value your loyalty as a long-time customer and sincerely regret that you've encountered these issues. Rest assured, we're taking steps to prevent similar problems in the future. As a gesture of goodwill, we'd like to offer you a 20% discount on your next order.

If you have any further questions or concerns, please don't hesitate to reach out. We're here to ensure your complete satisfaction.

Best regards,

Alex Johnson

Customer Service Representative

</output>

# EVALUATION CRITERIA AND SCORING RUBRIC

Here are the evaluation criteria and the rubric that you need to use for evaluating the task:

<evaluation_criteria>

How well the response addresses the specific issues raised in the customer's query?

</evaluation_criteria>

<scoring_rubric>

- Score 1: The response completely fails to address the customer's needs and ignores the specific issues raised.

- Score 2: The response barely addresses the customer's query and misses most of the specific issues raised.

- Score 3: The response partially addresses the customer's query, touching on some of the specific issues but leaving others unaddressed.

- Score 4: The response adequately addresses most aspects of the customer's query and the specific issues raised.

- Score 5: The response fully and comprehensively addresses all aspects of the customer's query and all specific issues raised in a highly satisfactory manner.

</scoring_rubric>

# INSTRUCTIONS FOR THE EVALUATION

1. Understand the task and criteria: Familiarize yourself with the task to be evaluated. Review the evaluation criteria and scoring rubric to understand the different levels of performance and the descriptions for each score.

2. Review the inputs and output: Look at the inputs provided for the task. Examine the output generated from completing the task.

3. Compare output to score descriptions: Compare the output against the criteria and score descriptions in the scoring rubric. For each criterion, decide which description best matches the output.

4. After comparing the output to the score descriptions, pay attention to the small details that might impact the final score that you assign. Sometimes a small difference can dictate the final score.

5. Write verbal feedback justifying your evaluation that includes a detailed rationale, referring to specific aspects of the output and comparing them to the rubric.

6. Assign a final score based on the scoring rubric.

## FORMAT FOR THE EVALUATION

- Write the verbal feedback inside <feedback> tags without any additional surrounding text.

- Write the numeric score inside <score> tags, without any additional surrounding text and always after the feedback.

Please accurately evaluate the task. Strictly adhere to the evaluation criteria and rubric.

Hyperparameters chosen for evaluation for Flow Judge and baselines

| temperature | 0.1 |

| top_p | 0.95 |

| stop (until) | <|endoftext|> |

| max_seq_len | 8192 |

Appendix C. Fine-tuning details

Hardware and software versions of the setup

For fine-tuning, we used the Axolotl framework from Wing Lian.

Our hardware setup comprised three 4090 GPUs.

Key software versions included:

| Software | Version |

|---|---|

| CUDA | 1.21 |

| PEFT | 0.12.0 |

| Transformers | 4.44.2 |

| PyTorch | 2.4.1+cu121 |

| Datasets | 2.20.0 |

| Tokenizers | 0.19.1 |

| Axolotl | 3.4.1 |

Training hyperparameters

The following hyperparameters were used during training:

| Hyperparameter | Value |

|---|---|

| learning_rate | 1e-05 |

| train_batch_size | 1 |

| eval_batch_size | 1 |

| seed | 42 |

| distributed_type | multi-GPU |

| num_devices | 3 |

| gradient_accumulation_steps | 4 |

| total_train_batch_size | 12 |

| total_eval_batch_size | 3 |

| optimizer | Adam with betas=(0.9, 0.999) and epsilon=1e-08 |

| lr_scheduler_type | cosine |

| lr_scheduler_warmup_steps | 62 |

| num_epochs | 5 |

For rsLoRA:

| Hyperparameter | Value |

|---|---|

| lora_r | 256 |

| lora_alpha | 128 |

| lora_dropout | 0.15 |